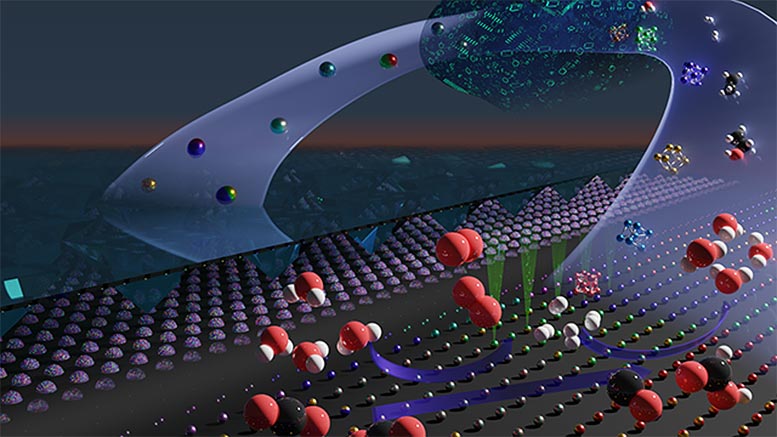

Machine learning presents a roadmap to define new materials for any need, with implications for green energy and waste reduction. Scientists dedicate more resources each year to discovering novel materials to fuel the world. As natural resources diminish and demand for higher-value, advanced-performance products grows, researchers have increasingly looked to nanomaterials.

Components like nanoparticles have already found their way into applications ranging from energy storage and conversion to quantum computing and therapeutics. But given the vast compositional and structural tunability nanochemistry enables, serial experimental approaches to identify new materials impose insurmountable limits on discovery.

Scientists at Northwestern University and the Toyota Research Institute (TRI) have successfully applied machine learning to guide the synthesis of new nanomaterials, removing barriers associated with materials discovery. The highly trained algorithm combed through a defined dataset to accurately predict new structures that could fuel processes in the clean energy, chemical, and automotive industries. Chad Mirkin, a Northwestern nanotechnology expert and the paper’s corresponding author, said, “We asked the model to tell us what mixtures of up to seven elements would make something that hasn’t been made before, and the machine predicted 19 possibilities, and after testing each experimentally, we found 18 of the predictions were correct.”

Mapping the materials genome

In mapping the human genome, scientists were tasked with identifying combinations of four bases. But the loosely synonymous “materials genome” includes nanoparticle combinations of any of the 118 elements in the periodic table, as well as parameters of shape, size, phase morphology, crystal structure, and more. Building smaller subsets of nanoparticles in the form of Megalibraries will bring researchers closer to completing a full map of the materials genome. According to Mirkin, what makes this so important is access to unprecedentedly large, high-quality datasets, because machine learning models and AI algorithms can only be as good as the data used to train them.
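To give a rough sense of the scale involved, here is a minimal sketch that counts how many distinct sets of elements could be chosen when mixing up to seven elements, the cap mentioned in Mirkin's quote. It assumes, purely for illustration, that all 118 elements are candidates and that a composition is just an unordered set of elements; it ignores ratios, size, shape, phase, and crystal structure, and it is not the method used in the study. The function name `count_element_sets` is hypothetical.

```python
from math import comb


def count_element_sets(n_elements: int, max_size: int) -> int:
    """Count unordered sets of 1..max_size distinct elements drawn from n_elements.

    Illustrative assumption: a "composition" is only the set of elements present,
    with no information about proportions or structure.
    """
    return sum(comb(n_elements, k) for k in range(1, max_size + 1))


if __name__ == "__main__":
    # Show how the number of candidate element sets grows as more elements are allowed.
    for k in range(1, 8):
        print(f"up to {k} element(s): {count_element_sets(118, k):,} sets")
```

The count grows steeply with each additional element allowed, and adding the structural parameters listed above would enlarge the space further, which is why testing candidates one at a time cannot cover it.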

His team developed the Megalibraries by using a technique (also invented by Mirkin) called polymer pen lithography, a massively parallel nanolithography tool that enables the site-specific deposition of hundreds of thousands of features each second. Mirkin said that even with something similar to a “genome” of materials, identifying how to use or label them requires different tools. “Even if we can make materials faster than anybody on earth, that’s still a droplet of water in the ocean of possibility,” Mirkin said. “We want to define and mine the materials genome, and the way we’re doing that is through artificial intelligence.”

Machine learning applications are ideally suited to tackle the complexity of defining and mining the materials genome, but they are gated by the ability to create datasets to train algorithms in the space. Mirkin said the combination of Megalibraries with machine learning may finally eliminate that problem, leading to an understanding of which parameters drive certain materials properties.

Chemists overlooked these complex materials

Megalibraries provide a map, while machine learning provides the legend. Using Megalibraries as a source of high-quality, large-scale materials data for training artificial intelligence (AI) algorithms enables researchers to move away from the “keen chemical intuition” and serial experimentation that typically accompany the materials discovery process, according to Mirkin. “Northwestern had the synthesis capabilities and the state-of-the-art characterization capabilities to determine the structures of the materials we generate,” Mirkin said. “We worked with TRI’s AI team to create data inputs for the AI algorithms that ultimately made these predictions about materials no chemist could predict.”

Metallic nanoparticles show promise for catalyzing industrially critical reactions such as hydrogen evolution, carbon dioxide (CO2) reduction, and oxygen reduction and evolution. The model was trained on a large Northwestern-built dataset to look for multi-metallic nanoparticles with set parameters around phase, size, dimension, and other structural features that change the properties and function of nanoparticles. The Megalibrary technology may also drive discoveries across many areas critical to the future, including plastic upcycling, solar cells, superconductors, and qubits.

The team is now using the approach to find catalysts critical to fueling processes in the clean energy, automotive, and chemical industries. Identifying new green catalysts will enable the conversion of waste products and plentiful feedstocks to useful matter, hydrogen generation, carbon dioxide utilization, and the development of fuel cells. New catalysts could also replace expensive and rare materials like iridium, the metal used to generate green hydrogen and CO2 reduction products. Before the advent of Megalibraries, machine learning tools were trained on incomplete datasets collected by different people at different times, limiting their predictive power and generalizability. Megalibraries allow machine learning tools to do what they do best: learn and get smarter over time.